February 16, 2026

Lessons from Kaigi on Rails 2025 — Shohei Kobayashi

In large Rails systems, background jobs are not a detail — they are the system. Email delivery, AI processing, document generation, data cleanup, notifications, analytics pipelines — everything heavy runs asynchronously.

At Kaigi on Rails 2025, Site Reliability Engineer Shohei Kobayashi (@srockstyle) presented a rare, deeply practical story: the full migration of a mature production system from Delayed Job to Solid Queue, Rails’ modern first-party backend for Active Job.

This was not a toy example. It was a decade-old application under real production load.

The Context: A Long-Lived Rails System

The application discussed had been running for over 10 years:

- Originally released in 2012 (Rails 3.2.1)

- Upgraded to Rails 7.1 by 2024

- Heavy reliance on asynchronous processing

- Mixed legacy and modern patterns

- Production infrastructure at scale

As the presentation notes, it was a “Rails application released more than 10 years ago,” evolving across multiple Rails generations.

Over time, background jobs accumulated into a critical subsystem handling operations such as:

- Initial content generation

- Chatbot interactions

- Email broadcasting

- Data updates

- Customer deletion workflows

- AI-assisted manual creation

- Media processing

In other words: everything expensive.

How Background Jobs Actually Work (Refresher)

Kobayashi begins with the classic asynchronous flow:

- User performs an action

- A job is enqueued in the database

- The user receives a fast response

- A background worker processes the job

- Results are stored back in the database

This architecture is foundational to scalable web apps — but its implementation determines whether scaling actually works.
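The five steps above can be sketched in plain Ruby. This is a minimal, hedged sketch, not how Rails implements it. `JOBS` (an in-memory queue) stands in for the jobs table, and `RESULTS` stands in for wherever results are written back. `handle_request` and the 202 response are hypothetical names chosen for illustration. A real application would use Active Job and the database instead.

```ruby
# Hypothetical stand-ins: JOBS plays the jobs table, RESULTS the results table.
JOBS = Queue.new
RESULTS = {}
RESULTS_LOCK = Mutex.new

# Steps 1-3: the user's action enqueues a job and the response returns at once.
def handle_request(user_id)
  JOBS << { user_id: user_id }
  { status: 202, body: "queued" }
end

# Step 4: a background worker takes jobs off the queue.
worker = Thread.new do
  while (job = JOBS.pop) # pop returns nil once the queue is closed and empty
    result = "report for user #{job[:user_id]}" # the expensive work would go here
    RESULTS_LOCK.synchronize { RESULTS[job[:user_id]] = result } # step 5: store result
  end
end

response = handle_request(42)
JOBS.close  # no more work in this demo, so the worker loop can finish
worker.join
puts response[:status]
puts RESULTS[42]
```

The request path only pays for the enqueue. Everything expensive happens on the worker thread.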

The Hidden Failure: Why Delayed Job Stops Scaling

Delayed Job stores jobs in the database and workers fetch them using row locks.

At first glance, adding workers should increase throughput.

In reality, it often doesn’t.

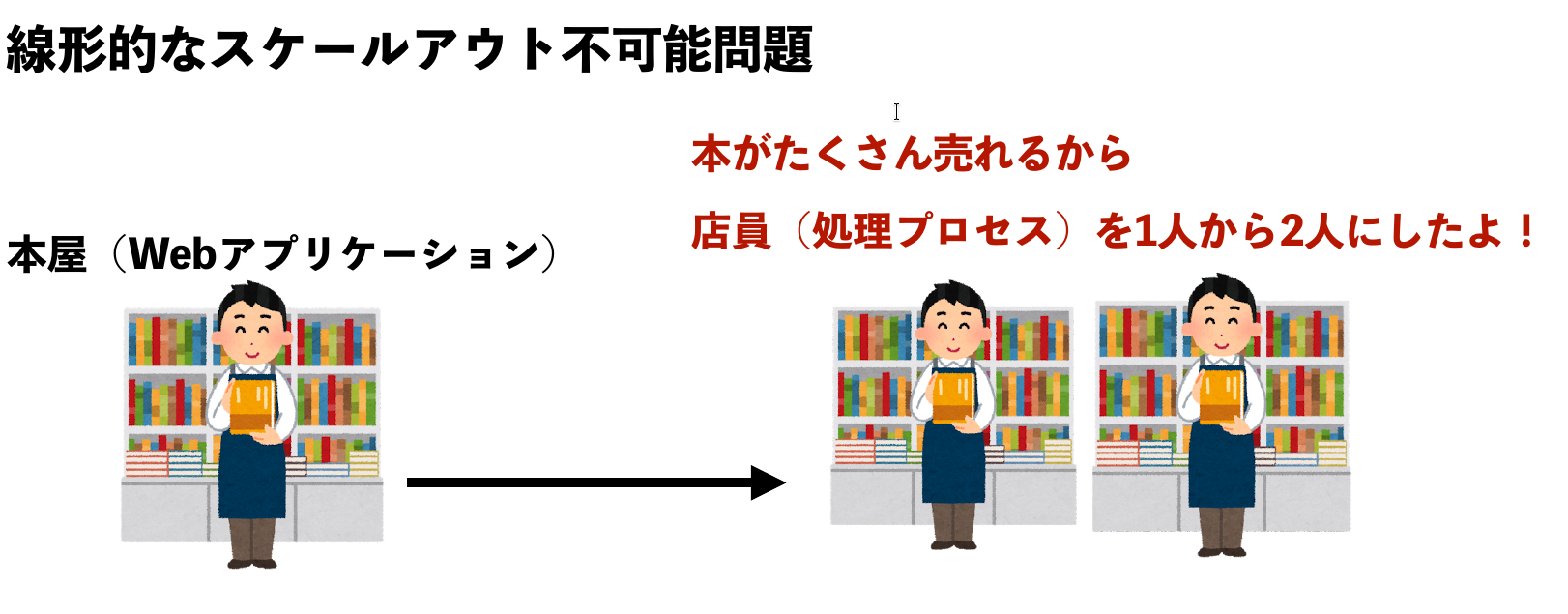

The “One Calculator” Problem

The presentation uses a brilliant analogy:

Hiring more clerks does not double throughput if they must share a single calculator.

In database terms:

- Workers compete for the same rows

- One worker locks a job row

- Others wait for the transaction to finish

- Idle time accumulates

- Throughput plateaus

A simplified query pattern looks like:

```sql
SELECT * FROM jobs
WHERE processed = 'no'
LIMIT 1
FOR UPDATE;
```

Only one worker can lock that row. The others stall until its transaction commits.

The presentation explicitly shows that additional workers become “waiting workers” until the transaction commits, meaning performance does not scale linearly.
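The "one calculator" effect can be reproduced with threads. This is a toy sketch, not a database benchmark. It assumes every worker must take the same lock, standing in for `FOR UPDATE` on the same first pending row. It also assumes each worker holds that lock for `hold_seconds` until its "transaction" commits. `drain_with_one_lock` is a hypothetical helper.

```ruby
# Toy "one calculator" model: all workers share one lock (the first pending row)
# and hold it until their transaction commits, so extra workers only wait.
def drain_with_one_lock(job_count:, workers:, hold_seconds:)
  jobs = Queue.new
  job_count.times { |i| jobs << i }
  first_row_lock = Mutex.new
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)

  threads = Array.new(workers) do
    Thread.new do
      loop do
        claimed = first_row_lock.synchronize do
          next false if jobs.empty?
          jobs.pop
          sleep(hold_seconds) # lock held until the "transaction" commits
          true
        end
        break unless claimed
      end
    end
  end
  threads.each(&:join)
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
end

[1, 4].each do |workers|
  elapsed = drain_with_one_lock(job_count: 8, workers: workers, hold_seconds: 0.05)
  puts format("%d worker(s): %.2fs", workers, elapsed)
end
```

Because the lock serializes every claim, both runs take at least 8 × 0.05 s = 0.4 s. Going from one worker to four adds waiting threads, not throughput.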

Two Types of Asynchronous Work

A key insight from the talk: not all background jobs behave the same.

Type A — Long-Running Jobs

Examples:

- Initial group creation

- Account deletion

- Large data operations

These tolerate latency.

Type B — “Almost Real-Time” Jobs

Examples:

- Email notifications

- Chatbot responses

- AI generation

- System updates

These must execute quickly despite being asynchronous.

A system optimized only for one type fails the other.
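A small calculation shows why mixing the two types hurts. This sketch assumes one worker per queue, that every job is enqueued at time 0, and that durations are illustrative. None of these numbers come from the talk. `wait_times` is a hypothetical helper that returns how long each job waits in a FIFO queue.

```ruby
# Toy model: one worker drains a FIFO queue; all jobs are enqueued at time 0.
# Returns how many seconds each job waits before it starts.
def wait_times(queue)
  clock = 0
  queue.to_h do |name, duration|
    waited = clock
    clock += duration
    [name, waited]
  end
end

# One shared queue: the email waits behind a 10-minute deletion job.
shared = wait_times([["account_deletion", 600], ["email_notification", 1]])

# A dedicated "almost real-time" queue with its own worker: the email starts at once.
realtime = wait_times([["email_notification", 1]])

puts "shared queue: email waits #{shared['email_notification']}s"
puts "isolated queue: email waits #{realtime['email_notification']}s"
```

A one-second notification inherits the latency of whatever long-running job happens to be ahead of it. That is the argument for separating queues by job type.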

Operational Goals of the Migration

The team defined concrete requirements:

- True horizontal scalability

- Increased throughput

- Efficient resource utilization

- Priority control

- Queue isolation per job type

- Unified scheduling system

- Migration to Active Job

- Alignment with “Rails Way”

- Reduced operational complexity

In short: make the job system predictable, scalable, and maintainable.

Why Not Sidekiq Pro?

Sidekiq Pro is powerful — arguably the industry standard — but it was not chosen.

Reasons included:

- Requires Redis (new infrastructure)

- Additional AWS resources

- Licensing cost

- Migration complexity

- Some features not fully accessible via Active Job

The team had a guiding SRE principle:

Do not increase the number of operational components unnecessarily.

Enter Solid Queue

Solid Queue is Rails’ official database-backed job system.

It satisfies several constraints simultaneously:

- Uses existing database infrastructure

- Integrates natively with Active Job

- Supports multiple queues

- Provides scheduling

- Requires no external services

- Avoids the row-lock contention that limits traditional database-backed queues

The presentation highlights that Solid Queue is developed by the Rails core team and designed for modern Rails applications.

The Critical Innovation: SKIP LOCKED

Solid Queue solves the core scaling problem with a different locking strategy:

```sql
SELECT * FROM jobs_table
LIMIT 1
FOR UPDATE SKIP LOCKED;
```

This means:

- Workers skip rows already locked by others

- No blocking wait

- Workers immediately fetch different jobs

- Parallelism emerges naturally

Returning to the analogy:

Each clerk now has their own calculator.

The result: throughput increases roughly in proportion to worker count, until the database itself becomes the limiting factor.
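Both locking strategies can be compared in a discrete-time toy model. It counts ticks, not real database time. It assumes each worker holds its row lock for `hold_ticks` before committing. Under `:for_update`, an idle worker targets the first unfinished row and waits if that row is locked. Under `:skip_locked`, it takes the first row nobody has locked. `ticks_to_drain` and `Row` are hypothetical names.

```ruby
# Discrete-time toy model of workers claiming rows (not a database benchmark).
Row = Struct.new(:state) # :pending, :locked or :done

def ticks_to_drain(rows:, workers:, hold_ticks:, strategy:)
  table = Array.new(rows) { Row.new(:pending) }
  held = Array.new(workers)         # row currently locked by each worker
  commit_at = Array.new(workers, 0) # tick at which each worker commits
  tick = 0
  loop do
    # Commit transactions whose lock time is up.
    workers.times do |w|
      next unless held[w] && commit_at[w] <= tick
      held[w].state = :done
      held[w] = nil
    end
    break if table.all? { |row| row.state == :done }

    # Idle workers try to claim a row.
    workers.times do |w|
      next if held[w]
      row =
        if strategy == :skip_locked
          table.find { |r| r.state == :pending }  # skip rows others have locked
        else
          table.find { |r| r.state != :done }     # may be locked: worker must wait
        end
      next unless row&.state == :pending
      row.state = :locked
      held[w] = row
      commit_at[w] = tick + hold_ticks
    end
    tick += 1
  end
  tick
end

[1, 2, 4].each do |workers|
  blocking = ticks_to_drain(rows: 8, workers: workers, hold_ticks: 2, strategy: :for_update)
  skipping = ticks_to_drain(rows: 8, workers: workers, hold_ticks: 2, strategy: :skip_locked)
  puts "#{workers} worker(s): FOR UPDATE #{blocking} ticks, SKIP LOCKED #{skipping} ticks"
end
```

With 8 rows and a hold time of 2 ticks, the `FOR UPDATE` total stays at 16 ticks for 1, 2, or 4 workers. The `SKIP LOCKED` total drops to 8 and then 4, which is the "own calculator" effect in miniature.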

Infrastructure Simplicity

Another major advantage:

No new infrastructure required.

The team simply created a new database within an existing Aurora instance.

No Redis clusters. No additional services to monitor. No new operational burden.

For organizations prioritizing reliability over novelty, this is decisive.
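The talk does not show the team's configuration. As a hedged sketch, a Rails app commonly points Solid Queue at a separate database with a fragment like the one below. It assumes the `solid_queue` gem is installed and that `config/database.yml` has an entry named `queue` pointing at the new database on the existing Aurora instance. The entry name is illustrative.

```ruby
# config/environments/production.rb (fragment; assumes Rails 7.1+ and the solid_queue gem)
Rails.application.configure do
  # Route Active Job to Solid Queue by default.
  config.active_job.queue_adapter = :solid_queue
  # Keep Solid Queue's tables in a separate "queue" database
  # (an assumed entry in config/database.yml, hosted on the existing Aurora instance).
  config.solid_queue.connects_to = { database: { writing: :queue } }
end
```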

Migration Strategy (Real-World Safe Approach)

Large systems cannot flip job backends overnight.

The migration proceeded in stages:

1) Eliminate Delayed Job-Specific APIs

Legacy patterns like:

```ruby
MyClass.delay.do_something
```

create tight coupling to Delayed Job, because `delay` is a Delayed Job API with no Active Job equivalent.

These had to be removed first.
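Finding these call sites is mostly a search problem. The helper below is a hypothetical audit script, not something described in the talk. It flags lines that use the `.delay` proxy or Delayed Job's `handle_asynchronously` macro. It is a plain text search, so it may also flag unrelated methods that happen to be named `delay`.

```ruby
# Hypothetical audit helper: report line numbers that still use Delayed Job's own APIs.
DELAYED_JOB_PATTERNS = [
  /\.delay\b/,                # MyClass.delay.do_something, obj.delay(run_at: ...)
  /\bhandle_asynchronously\b/ # class-level macro provided by Delayed Job
].freeze

def delayed_job_call_sites(source)
  source.each_line.with_index(1).filter_map do |line, number|
    number if DELAYED_JOB_PATTERNS.any? { |pattern| line.match?(pattern) }
  end
end

# Scanning an app might look like:
# Dir.glob("app/**/*.rb").each do |path|
#   lines = delayed_job_call_sites(File.read(path))
#   puts "#{path}: #{lines.join(', ')}" unless lines.empty?
# end
```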

2) Standardize on Active Job

Jobs were rewritten as explicit Active Job classes:

```ruby
class MailJob < ApplicationJob
  queue_as :mail

  def perform(user_id:)
    # ...
  end
end
```

During the transition, an individual job class could still run on the old backend by specifying:

```ruby
self.queue_adapter = :delayed_job
```

This allowed a gradual rollout without breaking production.

3) Introduce Solid Queue Configuration

Workers and dispatchers were configured per queue:

- Separate queues for different workloads

- Adjustable polling intervals

- Tunable concurrency

- Process isolation
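The team's exact settings are not reproduced in the talk summary. As a hedged sketch, a per-queue layout in Solid Queue's `config/queue.yml` might look like the YAML embedded below. The queue names, thread and process counts, and intervals are all assumptions. The surrounding Ruby parses that YAML and totals worker threads per queue group, which is roughly how many jobs each group can run at once. `threads_per_queue_group` is a hypothetical helper.

```ruby
require "yaml"

# Hypothetical config/queue.yml; every value here is illustrative, not from the talk.
QUEUE_YML = <<~YAML
  production:
    dispatchers:
      - polling_interval: 1
        batch_size: 500
    workers:
      - queues: [mail, chatbot]   # "almost real-time" jobs: poll often
        threads: 5
        processes: 2
        polling_interval: 0.1
      - queues: [long_running]    # latency-tolerant jobs: isolated, poll less often
        threads: 2
        processes: 1
        polling_interval: 2
YAML

# Each worker process runs `threads` jobs at once, so capacity is threads * processes.
def threads_per_queue_group(config, env)
  config.fetch(env).fetch("workers").to_h { |w| [w["queues"], w["threads"] * w["processes"]] }
end

config = YAML.safe_load(QUEUE_YML)
p threads_per_queue_group(config, "production")
```

Separate worker entries give each workload its own polling interval and concurrency. A burst of long-running jobs therefore cannot occupy the threads reserved for notifications.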

4) Switch Jobs to the New Backend

Once validated, jobs were pointed to Solid Queue.

5) Remove Delayed Job Completely

Only after stability was confirmed was the legacy system removed.

Observability: The Missing Piece

One weakness of Solid Queue highlighted in the talk:

It does not provide built-in monitoring.

Delayed Job exposes many metrics automatically. Solid Queue does not.

The team implemented custom metrics including:

Queue State Metrics

- Number of pending jobs

- Scheduled jobs

- Running jobs

- Retry counts

Performance Metrics

- Throughput per minute

- Maximum wait time

- Median wait time

- Execution duration

- Lock age

Monitoring these values is essential for detecting backlogs and failures.
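Several of these metrics reduce to simple arithmetic over job timestamps. The sketch below works on plain hashes with `:enqueued_at`, `:started_at`, and `:finished_at` in seconds. That row shape is an assumption; in production the values would be read from Solid Queue's tables. `queue_metrics` and `median` are hypothetical helpers. The age of the oldest running job is used only as a rough proxy for lock age.

```ruby
# Hypothetical monitoring helpers over plain job rows (timestamps in seconds, nil if not reached).
def median(values)
  return nil if values.empty?
  sorted = values.sort
  mid = sorted.size / 2
  sorted.size.odd? ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2.0
end

def queue_metrics(rows, now:)
  pending  = rows.select { |r| r[:started_at].nil? }
  running  = rows.select { |r| r[:started_at] && r[:finished_at].nil? }
  finished = rows.select { |r| r[:finished_at] }
  waits    = rows.filter_map { |r| r[:started_at] && r[:started_at] - r[:enqueued_at] }
  {
    pending: pending.size,
    running: running.size,
    throughput_last_minute: finished.count { |r| r[:finished_at] > now - 60 },
    max_wait: waits.max,
    median_wait: median(waits),
    oldest_running_age: running.map { |r| now - r[:started_at] }.max # rough lock-age proxy
  }
end

rows = [
  { enqueued_at: 0,  started_at: 2,   finished_at: 5 },
  { enqueued_at: 10, started_at: 14,  finished_at: 20 },
  { enqueued_at: 30, started_at: 31,  finished_at: nil },
  { enqueued_at: 50, started_at: nil, finished_at: nil }
]
p queue_metrics(rows, now: 70)
```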

Understanding the Internal Job Lifecycle

To operate Solid Queue effectively, the team studied its internal tables:

- Scheduled jobs

- Ready executions

- Claimed executions

- Failed executions

Knowing where a job resides reveals its exact state.

This knowledge is crucial for troubleshooting production incidents.
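A job's state follows from which execution table holds its row, so troubleshooting starts with a lookup. The table names below are Solid Queue's. The in-memory hash standing in for queries, and the `job_state` helper, are hypothetical. The sketch assumes finished jobs are recognized by a set `finished_at` in `solid_queue_jobs`.

```ruby
# Toy lookup: which execution table holds the job's row determines its state.
EXECUTION_TABLES = {
  "solid_queue_scheduled_executions" => :scheduled,
  "solid_queue_ready_executions"     => :ready,
  "solid_queue_claimed_executions"   => :claimed,
  "solid_queue_failed_executions"    => :failed
}.freeze

# rows_by_table: table name => job ids found there (a stand-in for real queries).
# finished_job_ids: ids whose solid_queue_jobs.finished_at is set.
def job_state(job_id, rows_by_table, finished_job_ids)
  return :finished if finished_job_ids.include?(job_id)
  EXECUTION_TABLES.each do |table, state|
    return state if rows_by_table.fetch(table, []).include?(job_id)
  end
  :unknown
end

rows_by_table = {
  "solid_queue_ready_executions"  => [1],
  "solid_queue_failed_executions" => [2]
}
[1, 2, 3, 4].each { |id| puts "job #{id}: #{job_state(id, rows_by_table, [3])}" }
```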

The Outcome: Linear Scaling Achieved

With Solid Queue:

- Workers no longer block each other

- Throughput scales predictably

- Infrastructure complexity remains low

- Migration risk is controlled

- Legacy patterns are eliminated

The job system becomes a stable, scalable subsystem rather than a hidden bottleneck.

Why This Story Matters to the Rails Ecosystem

This presentation reflects a broader trend:

Rails is reclaiming core infrastructure responsibilities.

Instead of relying exclusively on external services, modern Rails offers first-party solutions that are:

- Good enough for large systems

- Easier to operate

- Deeply integrated

- Cost-efficient

- Predictable

Solid Queue represents a shift toward batteries-included architecture for asynchronous processing.

Final Thoughts

The migration described by Shohei Kobayashi at Kaigi on Rails 2025 is more than a tooling change. It is a case study in how mature systems evolve:

- Identify real bottlenecks

- Define operational goals

- Choose tools aligned with constraints

- Migrate incrementally

- Measure everything

Most importantly, it demonstrates that database-backed queues can scale — if designed correctly.

For teams maintaining long-lived Rails applications, this is not theoretical advice. It is a roadmap.