February 12, 2026

Eliminating Connection Pool Exhaustion in Production

At Kaigi on Rails 2025, 片田 恭平 (@katakyo) delivered a deeply practical talk titled:

“もう並列実行は怖くない — コネクション枯渇解消のための実践的アプローチ” (“Parallel Execution Is No Longer Scary — A Practical Approach to Eliminating Connection Pool Exhaustion”)

This session explored a real-world scaling problem inside a production Rails system and walked through the technical, architectural, and operational lessons learned while introducing parallel processing safely.

This article distills the core technical insights from that presentation, with explicit reference to the production context and the design constraints discussed at the conference.

1. The Real-World Context

The case study comes from mybest, a large-scale product comparison platform handling millions of product records monthly (as described early in the presentation).

Their backend stack:

- Ruby on Rails 8.0

- Rake batch tasks

- Sidekiq for async jobs

- AWS ECS

- Amazon RDS

- parallel gem for concurrency

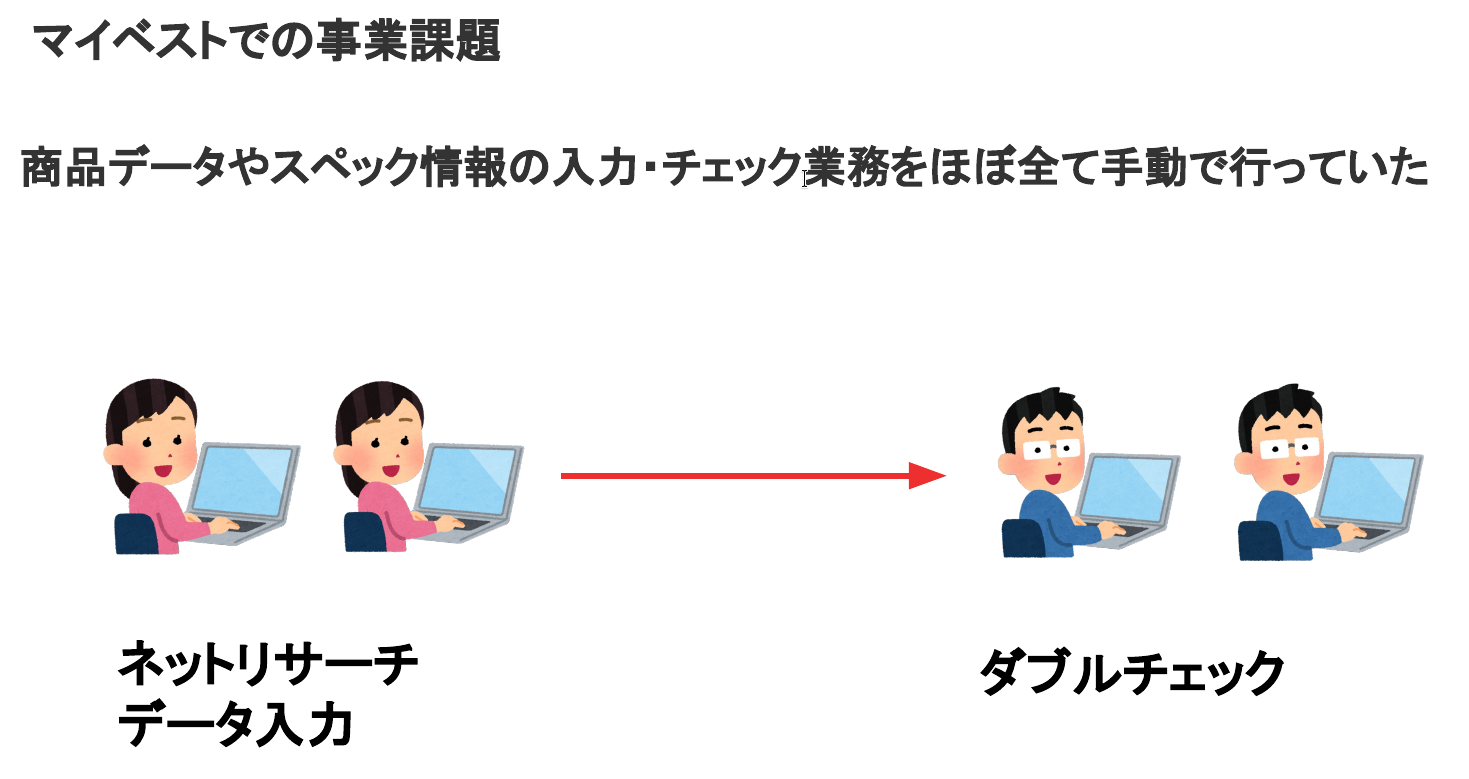

The business problem:

- AI workflows were introduced to automate product research.

- Each product required multiple LLM API calls.

- Each product processing run took ~2 minutes.

- Monthly volume reached ~1.2 million products.

The system became I/O-bound due to external API calls.

The natural question arose:

“If we parallelize this, won’t it just get faster?”

Technically: yes. Operationally: not without consequences.

2. The First Attempt: Multithreading with Parallel

The team implemented multithreaded execution using the parallel gem:

    Parallel.each(batch.to_a, in_threads: threads) do |product|
      research_spec_detail_items(product)
    end

As explained in the talk, the parallel gem allows switching between three execution modes:

- Multi-process

- Multi-thread

- Ractor

Given that the workload was I/O-bound (LLM calls), multi-threading was selected.

They configured:

- 1 ECS task

- 8 threads per process

And then…

Production errors began.

3. The Error: Connection Pool Exhaustion

They started seeing:

    ActiveRecord::ConnectionTimeoutError

The presentation shows explicit examples of connection pool exhaustion errors.

Why?

Because:

- Each thread performing database operations requires a connection.

- ActiveRecord uses a connection pool per process.

- The pool size was smaller than the number of concurrent threads.

This resulted in threads waiting for connections beyond checkout_timeout.

4. Understanding ActiveRecord Connection Pooling

A key concept emphasized in the talk:

ActiveRecord manages a database connection pool per process (web and background separately).

Important mechanics:

- Thread requests a connection from pool

- Performs DB work

- Returns connection to pool

If:

    threads > pool size

some threads block and may eventually time out.

The correct relationship (for batch processing):

    pool size = number of threads

This was illustrated clearly in the “failed case” vs “correct case” diagrams in the presentation.
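The failed and correct cases can be reproduced without a database. The sketch below is a stdlib-only stand-in, not ActiveRecord's implementation: `TinyPool`, `run_workers`, and all timing values are hypothetical names and numbers chosen for illustration. It assumes every worker holds its connection for the whole unit of work.

```ruby
# Minimal stand-in for a connection pool (NOT ActiveRecord's implementation).
class TinyPool
  class TimeoutError < StandardError; end

  def initialize(size:, checkout_timeout:)
    @available = size
    @checkout_timeout = checkout_timeout
    @mutex = Mutex.new
    @cond = ConditionVariable.new
  end

  # Borrow a connection slot for the duration of the block, then return it.
  def with_connection
    checkout
    begin
      yield
    ensure
      checkin
    end
  end

  private

  def now
    Process.clock_gettime(Process::CLOCK_MONOTONIC)
  end

  # Wait for a free slot; give up once checkout_timeout has elapsed.
  def checkout
    deadline = now + @checkout_timeout
    @mutex.synchronize do
      while @available.zero?
        remaining = deadline - now
        raise TimeoutError, "could not obtain a connection" if remaining <= 0
        @cond.wait(@mutex, remaining)
      end
      @available -= 1
    end
  end

  def checkin
    @mutex.synchronize do
      @available += 1
      @cond.signal
    end
  end
end

# Starts `threads` workers against a pool of `pool_size` slots and
# returns how many of them timed out waiting for a connection.
def run_workers(threads:, pool_size:)
  pool = TinyPool.new(size: pool_size, checkout_timeout: 0.1)
  errors = Queue.new
  Array.new(threads) do
    Thread.new do
      pool.with_connection { sleep 0.3 } # connection held during slow work
    rescue TinyPool::TimeoutError => e
      errors << e
    end
  end.each(&:join)
  errors.size
end

puts "8 threads, pool of 4: #{run_workers(threads: 8, pool_size: 4)} timeouts"
puts "8 threads, pool of 8: #{run_workers(threads: 8, pool_size: 8)} timeouts"
```

With these timings, the undersized pool should report timeouts for the threads that never got a slot, while the pool sized to the thread count should report none.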

5. Sidekiq’s Hidden Detail

When they moved execution to Sidekiq, another issue appeared.

Critical insight from the talk:

Sidekiq reserves 1 connection when the process boots.

This means:

If you configure:

    concurrency: 10
    pool: 10

you are already short by 1 connection.

Correct formula:

    pool size = concurrency + 1

This subtle detail is often overlooked in production Rails systems.

6. Global Connection Budgeting

One of the most valuable sections of the talk describes how to estimate total database connections across infrastructure.

They define:

Web connections

    ECS tasks × Puma workers × pool size

Batch connections

    ECS tasks × pool size

Background connections

    ECS tasks × Sidekiq processes × (threads + 1)

Total must not exceed:

    RDS max_connections

7. The Conservative Production Rule

A particularly important operational recommendation:

Keep total DB connections below half of RDS max_connections.

Why?

During deployments:

- Old and new ECS tasks may temporarily coexist.

- Connection count may double.

Exceeding max_connections causes:

    Too many connections

and potential downtime.

This is real production engineering — not theoretical scaling.
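The budgeting formulas and the half-of-max rule can be combined into a small calculator. This is a sketch under stated assumptions: `Fleet`, `connection_budget`, and every number in the example are hypothetical and do not describe the speaker's actual infrastructure.

```ruby
# Illustrative fleet description; every field name and number is an assumption.
Fleet = Struct.new(
  :web_tasks, :puma_workers, :web_pool,
  :batch_tasks, :batch_pool,
  :sidekiq_tasks, :sidekiq_processes, :sidekiq_concurrency,
  keyword_init: true
)

# Applies the three formulas and compares the total with half of max_connections.
def connection_budget(fleet, max_connections:)
  web = fleet.web_tasks * fleet.puma_workers * fleet.web_pool
  batch = fleet.batch_tasks * fleet.batch_pool
  # Each Sidekiq process needs its worker threads plus one extra connection.
  background = fleet.sidekiq_tasks * fleet.sidekiq_processes *
               (fleet.sidekiq_concurrency + 1)
  total = web + batch + background
  limit = max_connections / 2 # headroom for old and new tasks overlapping on deploy

  { web: web, batch: batch, background: background,
    total: total, limit: limit, within_budget: total < limit }
end

fleet = Fleet.new(
  web_tasks: 4, puma_workers: 2, web_pool: 5,
  batch_tasks: 10, batch_pool: 8,
  sidekiq_tasks: 2, sidekiq_processes: 1, sidekiq_concurrency: 50
)

puts connection_budget(fleet, max_connections: 1000)
```

Running a check like this in CI or before changing task counts is one way to catch a budget overrun before it reaches the database.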

8. Separating DB Work from I/O

Another major lesson from the talk:

Use:

    ActiveRecord::Base.connection_pool.with_connection

to ensure connections are:

- Borrowed temporarily

- Returned immediately after DB work

And crucially:

Separate:

- DB operations

- External API calls

If a thread performs:

    DB query
    LLM call (2 seconds)
    DB write

and keeps the connection open during the LLM call, you are artificially exhausting the pool.

Instead:

- Perform DB read

- Release connection

- Call external API

- Re-acquire connection only when needed

This dramatically reduces pool pressure.
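The effect of releasing the connection around the external call can be seen in a stdlib-only simulation. Here a `Queue` holding two fake connections stands in for the pool (in a Rails app that role is played by `ActiveRecord::Base.connection_pool`), and `db_work`, `call_llm`, and the sleep durations are hypothetical placeholders.

```ruby
# Stand-in pool: a thread-safe queue holding two fake connections.
POOL = Queue.new
2.times { |i| POOL << "conn-#{i}" }

# Borrow a connection for the block only, then hand it back.
def with_connection
  conn = POOL.pop # blocks until a connection is free
  yield conn
ensure
  POOL << conn if conn
end

def db_work(_conn)
  sleep 0.01 # hypothetical short query
end

def call_llm
  sleep 0.2 # hypothetical slow external API call
end

# Anti-pattern: the connection stays checked out during the API call.
def process_holding_connection
  with_connection do |conn|
    db_work(conn)
    call_llm
    db_work(conn)
  end
end

# Scoped: read, release, call the API, re-acquire only for the write.
def process_scoped
  with_connection { |conn| db_work(conn) }
  call_llm
  with_connection { |conn| db_work(conn) }
end

# Runs the given work in `threads` threads and returns the wall-clock time.
def elapsed_for(threads, &work)
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  Array.new(threads) { Thread.new(&work) }.each(&:join)
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
end

held = elapsed_for(8) { process_holding_connection }
scoped = elapsed_for(8) { process_scoped }
puts format("holding: %.2fs, scoped: %.2fs", held, scoped)
```

With eight threads sharing two connections, the holding variant serializes the API calls behind the pool, while the scoped variant lets them overlap.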

9. Long Transactions Are Dangerous

The talk also highlights:

- Long-running transactions increase lock contention.

- Holding connections during external calls is harmful.

- Uploading to S3 inside transactions caused bottlenecks.

Solution:

- Minimize transaction scope

- Move heavy I/O outside transaction

- Profile at method-level using rack-lineprof
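A rough way to see why moving heavy I/O out of a transaction matters is to measure how long the transaction stays open. The following is an illustrative sketch only: `FakeDatabase` is a stand-in for `ActiveRecord::Base.transaction`, and `save_record` and `upload_to_object_storage` are hypothetical helpers that simply sleep.

```ruby
# Stand-in for a database whose `transaction` records how long it stayed open.
class FakeDatabase
  attr_reader :open_durations

  def initialize
    @open_durations = []
  end

  def transaction
    started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    yield
  ensure
    @open_durations << Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
  end
end

def save_record
  sleep 0.005 # hypothetical quick DB write
end

def upload_to_object_storage
  sleep 0.1 # hypothetical slow upload (for example to S3)
end

# Before: the upload runs while the transaction (and its locks) is open.
def upload_inside_transaction(db)
  db.transaction do
    save_record
    upload_to_object_storage
    save_record
  end
end

# After: upload first, then open a short transaction for the writes only.
def upload_outside_transaction(db)
  upload_to_object_storage
  db.transaction do
    save_record
    save_record
  end
end

before = FakeDatabase.new
after = FakeDatabase.new
upload_inside_transaction(before)
upload_outside_transaction(after)
puts format("open inside: %.3fs, open outside: %.3fs",
            before.open_durations.first, after.open_durations.first)
```

One trade-off of this reordering is that an upload can succeed while the later write fails, so a real implementation would likely need cleanup or retry logic for orphaned files.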

10. Applying Amdahl’s Law

They measured the I/O ratio (~90% of workflow time).

Using Amdahl’s Law:

- Increasing threads has diminishing returns.

- They capped at 8 threads per process.

- Beyond that, scale horizontally instead.
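The diminishing returns can be made concrete with a short calculation. This sketch assumes the ~90% I/O share is the portion that benefits from threads and the remaining ~10% is effectively serial; `amdahl_speedup` is a hypothetical helper name.

```ruby
# Amdahl's Law: speedup = 1 / ((1 - p) + p / n)
# p = fraction of the work that can run in parallel, n = number of threads.
def amdahl_speedup(parallel_fraction, threads)
  1.0 / ((1.0 - parallel_fraction) + parallel_fraction / threads)
end

[1, 2, 4, 8, 16, 64].each do |n|
  puts format("%2d threads: %.2fx", n, amdahl_speedup(0.9, n))
end
```

Under these assumptions, 8 threads yield about 4.7x and 16 threads about 6.4x, against a theoretical ceiling of 10x, so each doubling of threads buys less while still doubling the connections required.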

Sidekiq’s official recommendation was also referenced:

Keep concurrency ≤ 50 per process.

11. Final Results

After:

- Correct pool sizing

- Proper Sidekiq configuration

- Global connection budgeting

- I/O separation

- Profiling and optimization

They achieved:

- 120 concurrent batch executions

- From 3,500 products/day → 450,000 products/day

- Sidekiq at 50 concurrency without impacting other jobs

That is roughly a 130-fold increase in daily throughput: a 100x-scale architectural improvement.

12. Core Engineering Takeaways

From this Kaigi on Rails 2025 presentation, we extract several durable lessons:

1️⃣ Parallelism requires connection math

Threads without pool sizing are production debt.

2️⃣ Sidekiq needs pool = concurrency + 1

Always account for the connection reserved at boot time.

3️⃣ Budget connections globally

Think at infrastructure scale, not per process.

4️⃣ Keep under half of max_connections

Plan for deployment overlap.

5️⃣ Separate DB from I/O

Never hold DB connections during external API calls.

6️⃣ Measure before scaling

Use profiling and I/O ratio measurement.

Conclusion

Parallel execution in Rails is not dangerous.

Unplanned parallel execution is.

片田 恭平’s presentation at Kaigi on Rails 2025 demonstrates that safe concurrency in Rails is:

- Mathematical

- Architectural

- Operational

- And deeply connected to infrastructure design

In modern Rails systems — especially those integrating AI workflows, background jobs, and external APIs — connection pool design is not an implementation detail.

It is a first-class architectural concern.