Lessons from Kaigi on Rails 2025 on SSE and Async

Modern Rails applications increasingly depend on external systems: third-party APIs, background services, data pipelines, and—more recently—AI and LLM inference. While Rails itself continues to evolve in performance and concurrency, I/O latency remains largely unavoidable in many real-world scenarios.

At Kaigi on Rails 2025, a Japanese conference dedicated to deep, production-level Rails topics, Taiki Kawakami (moznion) presented a session titled:

“Tackling Inevitable I/O Latency in Rails Apps with SSE and the async gem”

The talk addressed a practical and often under-discussed question: When latency cannot be eliminated, how can Rails applications remain responsive and trustworthy from the user’s perspective?

This article summarizes the core ideas of the session and expands on them with illustrative Rails examples, inspired by the architectural patterns discussed during the talk.

Dealing with Unavoidable I/O Waits: Leveraging SSE and Async Gems in Rails Apps

When developing and operating practical, commercial Rails applications, you often encounter situations where slow I/O degrades the end-user experience.

The causes vary: database lookups, aggregations, or updates that are simply slow when run inside a request; API calls between microservice components; or, increasingly, waiting on inference from AI (LLM) service integrations.

Such I/O waits often have limited optimization potential and tend to be baked into systems as “unavoidable latency.”

In this session, drawing on insights gained from developing multiple features that integrate Rails with LLMs, we’ll share concrete tips and practical techniques for using SSE (Server-Sent Events) and the async gem together. This approach keeps the end-user experience from being sacrificed, and can even improve it, in applications where I/O is inherently slow (that is, where there is little room for speed optimization).

The session is aimed primarily at engineers who handle (or are interested in handling) I/O-heavy processing or external service integrations in Rails, and we’ll present techniques for implementing asynchronous architectures that you can apply starting tomorrow.

We also hope to share the OSS contributions and feedback that came out of this work.

When Optimization Is Not Enough

Rails developers are well-versed in performance techniques: caching, background jobs, indexing, batching, and query optimization. However, as moznion emphasized during the session, many delays are not under the application’s control:

- Remote API calls

- Cross-service RPC

- OCR or image processing

- AI / LLM inference

- Long-running external workflows

In these cases, the problem is not raw speed, but lack of visibility. A blank screen or a spinning loader provides no assurance that progress is being made. Users may refresh, abandon the page, or assume the system is broken.

The key idea presented at Kaigi on Rails is simple but powerful:

If latency is inevitable, make it observable.

Server-Sent Events as a UX Strategy

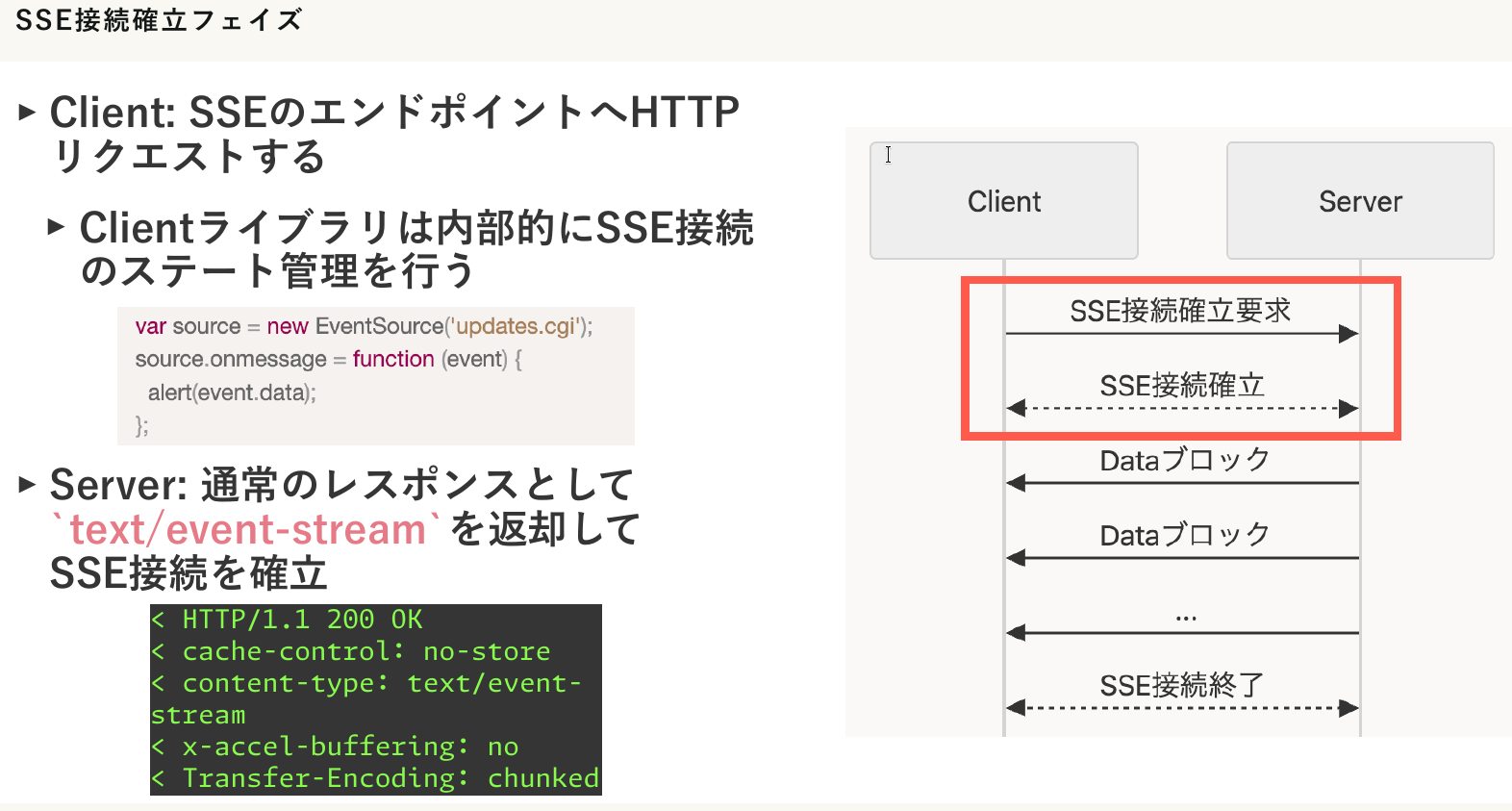

Server-Sent Events (SSE) provide a lightweight mechanism for streaming updates from the server to the client over a persistent HTTP connection. Unlike polling, SSE allows the server to push information as it becomes available.

In the talk, SSE was framed not as a networking trick, but as a user-experience strategy: show progress, intermediate states, and completion signals while work is ongoing.

Typical use cases discussed include:

- Progress indicators for long-running operations

- Streaming logs or status messages

- Incremental updates from background processing

- Real-time feedback during AI-driven workflows

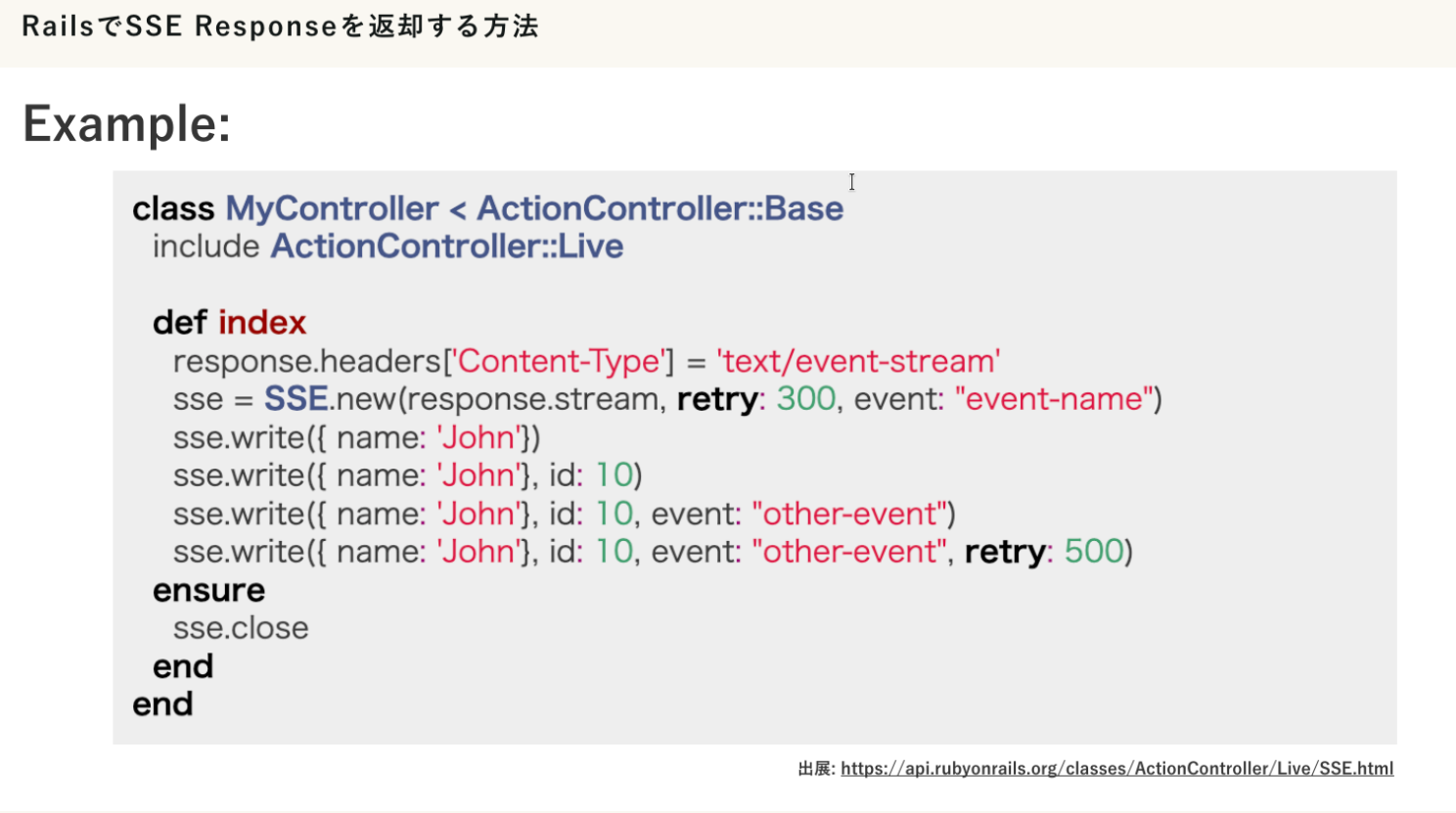

Rails supports SSE natively via ActionController::Live, making it accessible without external infrastructure.
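To make the mechanism more concrete, here is a minimal sketch of what a single event looks like on the wire in the `text/event-stream` format. The helper name `format_sse_event` is hypothetical and exists only for illustration; in a Rails app, `ActionController::Live::SSE` takes care of this framing for you.

```ruby
require "json"

# Hypothetical helper (not part of Rails): builds one text/event-stream frame.
def format_sse_event(data, event: nil, id: nil)
  lines = []
  lines << "event: #{event}" if event
  lines << "id: #{id}" if id
  # Each line of the payload gets its own "data:" field.
  JSON.generate(data).each_line(chomp: true) { |line| lines << "data: #{line}" }
  # A blank line terminates the event.
  lines.join("\n") + "\n\n"
end

frame = format_sse_event({ step: 1, status: "processing" }, event: "progress")
puts frame
```

The browser dispatches an event only once it sees the terminating blank line, which is why anything that holds back or batches those bytes on the way to the client delays the update.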

Example: Streaming Progress with SSE in Rails

The following example illustrates how a Rails controller can stream progress updates using SSE. This code is inspired by the patterns discussed in the Kaigi on Rails session, not copied from the slides.

    class ProgressController < ApplicationController
      include ActionController::Live

      def show
        response.headers["Content-Type"] = "text/event-stream"
        response.headers["Cache-Control"] = "no-cache"

        sse = SSE.new(response.stream)

        begin
          5.times do |step|
            sleep 1 # Simulating I/O-bound work
            sse.write({ step: step + 1, status: "processing" }, event: "progress")
          end
          sse.write({ status: "completed" }, event: "done")
        ensure
          sse.close
        end
      end
    end

This approach does not reduce execution time, but it dramatically improves perceived responsiveness by keeping the client informed.

Client-Side Consumption with EventSource

On the browser side, SSE is supported natively via the EventSource API. No additional libraries are required.

    const source = new EventSource("/progress");

    source.addEventListener("progress", (event) => {
      const data = JSON.parse(event.data);
      console.log(`Step ${data.step}: ${data.status}`);
    });

    source.addEventListener("done", () => {
      console.log("Processing completed");
      source.close();
    });

This model eliminates polling overhead and ensures updates arrive as soon as the server emits them, an advantage highlighted during the conference when comparing SSE to traditional request-response flows.

Why Async Matters for SSE

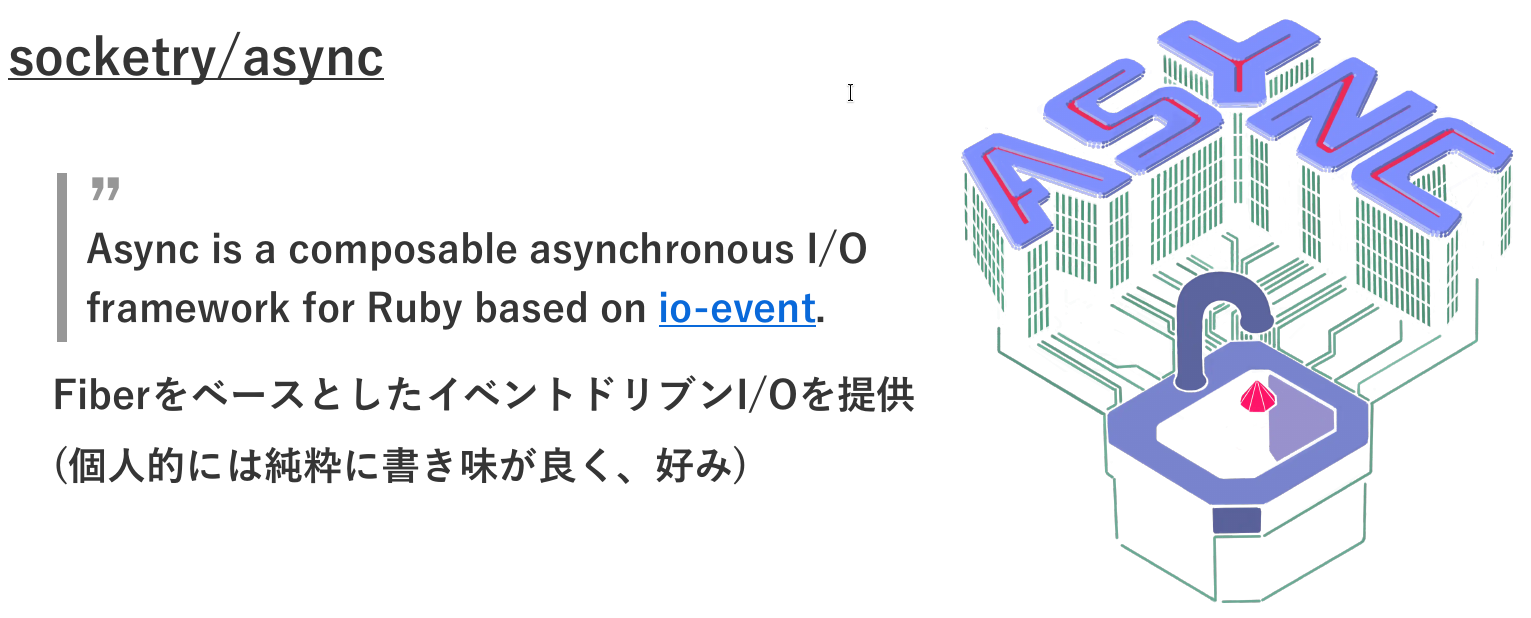

A central technical theme of moznion’s talk was the synergy between SSE and asynchronous execution using Ruby’s async gem.

For I/O-bound workloads, Fiber-based concurrency allows multiple operations to progress concurrently without blocking the main execution flow. This is particularly relevant when:

- Multiple external calls must run in parallel

- Partial results can be emitted incrementally

- The server should remain responsive under load

Example: Parallel I/O with Async (Illustrative)

    require "async"

    queue = Queue.new

    Async do |task|
      3.times do |i|
        task.async do
          sleep rand(1..3) # Simulated external call
          queue << { task: i, status: "finished" }
        end
      end
    end

In a real application, results placed on such a queue can be streamed through SSE as they arrive, matching the “parallel execution with progressive feedback” model described at Kaigi on Rails.
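As a rough sketch of that last step, the snippet below drains a queue and writes one SSE frame per result in completion order. Two things here are assumptions made so the example runs with only the standard library: plain threads stand in for the async gem's fibers, and a `StringIO` stands in for `response.stream`. It shows the shape of the flow, not the implementation presented in the talk.

```ruby
require "json"
require "stringio"

# Stand-in for response.stream so the sketch runs outside Rails (assumption).
stream = StringIO.new
queue = Queue.new
task_count = 3

# Plain threads replace the async gem's fibers here so that only the
# standard library is needed.
workers = task_count.times.map do |i|
  Thread.new do
    sleep rand(0.01..0.05) # Simulated external call
    queue << { task: i, status: "finished" }
  end
end

# Emit one "progress" event per result, in completion order.
task_count.times do
  result = queue.pop # Blocks until the next worker finishes
  stream.write("event: progress\ndata: #{JSON.generate(result)}\n\n")
end
stream.write("event: done\ndata: #{JSON.generate({ status: "completed" })}\n\n")

workers.each(&:join)
puts stream.string
```

Because the consumer pops from the queue instead of waiting for every worker to finish, the first result reaches the client as soon as the fastest call returns.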

Production Realities and Pitfalls

One of the most valuable aspects of the session was its focus on what breaks in real systems:

- Reverse proxies buffering SSE responses

- Missing or incorrect headers (Cache-Control, X-Accel-Buffering)

- Middleware that buffers response bodies and breaks streaming

- Challenges integrating SSE with OpenAPI tooling

- Risks of mixing ActionController::Live with standard JSON responses

These issues reinforce an important takeaway from the talk:

SSE works best when treated as a first-class delivery mechanism, not an afterthought.
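The header-related pitfalls in the list above can be sketched as a small helper. The method name `sse_headers` is hypothetical, and the exact set of headers a deployment needs depends on its proxy and middleware stack; `X-Accel-Buffering: no` is the response header nginx recognizes for turning off proxy buffering on a single response.

```ruby
# Hypothetical helper: response headers commonly set for an SSE endpoint.
def sse_headers
  {
    "Content-Type" => "text/event-stream",
    # Tell browsers and intermediaries not to cache the stream.
    "Cache-Control" => "no-cache",
    # Ask nginx not to buffer this response.
    "X-Accel-Buffering" => "no"
  }
end

# In a controller action this would be applied before the first write, e.g.
#   sse_headers.each { |name, value| response.headers[name] = value }
sse_headers.each { |name, value| puts "#{name}: #{value}" }
```

Keeping these in one place makes it harder for an individual streaming endpoint to ship without them.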

Conclusion

The Kaigi on Rails 2025 session by moznion highlights a shift in how Rails developers should think about performance. Instead of treating latency purely as a technical failure, the talk reframes it as a communication problem between the system and the user.

By combining Server-Sent Events with asynchronous I/O, Rails applications can remain transparent, responsive, and trustworthy—even when delays are unavoidable.

For teams building modern Rails systems that integrate external services, AI workflows, or long-running processes, the patterns discussed at Kaigi on Rails offer a pragmatic and production-ready approach to improving user experience without rewriting core architecture.